Welcome to the second write-up in the series Strategic barriers for public cloud adoption by enterprises! The first post, Strategic barriers for public cloud adoption by enterprises – cost v/s operational control, covered how preferences around cost and operational control affect cloud decision-making. Understanding the cost and operational control structure is important when considering cloud, and so are other factors, such as the topic of this post: the dependency on connectivity and on the content itself.

When it comes to public cloud, internet or WAN access cannot be ignored. Along with assessing connectivity, one also needs to understand how much content is transferred over the wire to make a sound cost-benefit analysis. Network bandwidth and network latency are the two prominent factors that define the success or failure of a cloud implementation. The industry is full of success stories of cloud being used to host enterprise application workloads or key business driver workloads. A closer look at these reveals that the most chatty and time-sensitive business applications are still preferably set up on premises.
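How the two factors interact can be shown with a rough back-of-the-envelope model. The sketch below assumes that the time for one user interaction is approximately the number of sequential round trips multiplied by the round-trip latency, plus the payload size divided by the bandwidth. It ignores protocol overhead, congestion and parallel requests. The function name and all figures are illustrative assumptions, not measurements.

```python
def estimate_seconds(round_trips, rtt_ms, payload_mb, bandwidth_mbps):
    """Rough time for one user interaction over a network link.

    Simplifying assumption: round trips are sequential and the payload
    moves at the full link bandwidth (no overhead, no congestion).
    """
    latency_part = round_trips * rtt_ms / 1000.0      # seconds spent waiting on round trips
    transfer_part = payload_mb * 8 / bandwidth_mbps   # megabits divided by megabits per second
    return latency_part + transfer_part


# Made-up figures: a chatty screen that needs 200 small calls to render.
chatty_lan = estimate_seconds(round_trips=200, rtt_ms=1, payload_mb=1, bandwidth_mbps=1000)
chatty_wan = estimate_seconds(round_trips=200, rtt_ms=40, payload_mb=1, bandwidth_mbps=100)

# A bulk transfer with few round trips is dominated by bandwidth instead.
bulk_wan = estimate_seconds(round_trips=2, rtt_ms=40, payload_mb=500, bandwidth_mbps=100)

print(f"chatty over LAN: {chatty_lan:.2f} s")
print(f"chatty over WAN: {chatty_wan:.2f} s")
print(f"bulk over WAN:   {bulk_wan:.2f} s")
```

With these assumed numbers, the chatty interaction is slowed almost entirely by latency, while the bulk transfer is slowed almost entirely by bandwidth. That is why both factors need to be assessed separately for each application.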

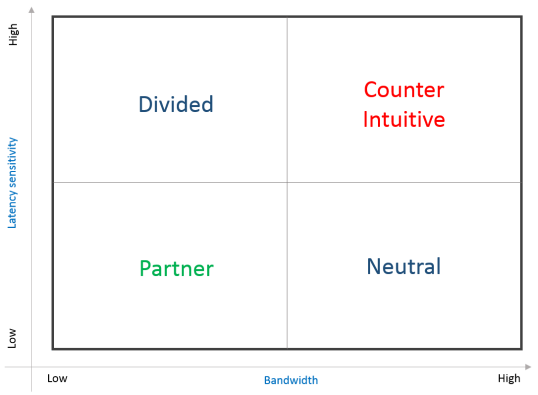

Consider the four quadrants above when migrating to cloud or recommending cloud for an enterprise. The sentiment of most enterprises falls into one of the four quadrants. As highlighted in the earlier post, different departments or independent entities of a large enterprise may need to be assessed independently. Below are the viewpoints from an enterprise perspective:

Cloud as partner: organizations located in prominent locations such as metros or large cities (generally referred to as Tier 1 or T1 cities) with good internet or WAN connectivity would consider cloud a viable option. It is important to note that these organizations have a concentrated location presence; in other words, they have little or no presence outside T1/T2 locations. Their business-critical applications are generally not time sensitive and can tolerate higher network latency. The amount of data transferred over the wire is also small and predictable. The assessment of a cloud implementation in this case cannot be done only at the enterprise level; it also requires a good understanding of where the end users of the cloud-hosted applications are located.

For example, consider a CRM application for a sales team located in metros: it can be hosted on cloud because its end users are in metro cities (good connectivity is assumed).

Cloud as neutral impact: organizations that can tolerate higher network latency (that is, are less latency sensitive) and that have moderate to high bandwidth fall in this category. Typically these enterprises see a steady network bandwidth experience and have a good understanding of their actual requirement. Applications in this segment generally cater to the needs of end users, with the majority of the traffic leading to revenue. A CDN may also be an integral part of the decision, as one of the non-negotiable technology components of the overall solution. Applications with higher ingress than egress traffic, or whose traffic stays within the cloud environment, would also prefer to leverage cloud. Applications that receive market data and perform market intelligence to produce daily, weekly or monthly reports are classic examples. Appropriate scenario planning might be required to highlight any benefits associated with moving to cloud.

Divided about cloud: applications with very stringent response times and low bandwidth requirements are generally divided on cloud adoption. The primary hurdle is the dependency on external partners to provide reliable connectivity services backed by strong service level agreements (SLAs) on timely response. The best connectivity options for cloud are available either as internet or as dedicated WAN lines, and the SLAs of these providers need to be evaluated against, and aligned with, the enterprise's operational level agreements (OLAs). Nearshore cloud data centers are one option to consider; however, the last mile can at best be on WAN, which requires a clearer understanding of the OLA definitions. Apart from close proximity, this involves assessing and changing application design for better cloud compatibility. The small-data and low-latency attributes are typically associated with applications that are either chatty or transactional in nature. Techniques that keep static data transfer low, such as lazy load, early load and effective caching, are some of the options to evaluate.

Cloud as counter intuitive: organizations with a high volume of data exchange within the enterprise would be more inclined towards an on-premises setup. Stringent application latency requirements further strengthen the case. Organizations such as large media houses or document scrutiny enterprises, whose internal employees act on content frequently, are good examples. These organizations generally prefer LAN over WAN or internet. Cloud is still an option, provided some of this work can be performed using services such as remote desktop or desktop-as-a-service offerings from a cloud vendor. It is important to understand the amount of processing involved and the impact on end-user experience. For example, high-graphics content creation is still better served by physical desktops than by virtual desktops.
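The four viewpoints can be summarized as a simple lookup. The sketch below assumes the two axes are latency sensitivity and data volume, which is one reading of the descriptions above rather than a definition taken from the figure. The function name and the example workloads are illustrative.

```python
def classify(latency_sensitive: bool, high_data_volume: bool) -> str:
    """Map two coarse workload traits to one of the four viewpoints.

    Assumption: the quadrant axes are latency sensitivity and the
    volume of data moved over the wire, as read from the text.
    """
    quadrants = {
        (False, False): "Cloud as partner",
        (False, True): "Cloud as neutral impact",
        (True, False): "Divided about cloud",
        (True, True): "Cloud as counter intuitive",
    }
    return quadrants[(latency_sensitive, high_data_volume)]


# Illustrative workloads drawn from the examples in the text.
workloads = {
    "CRM for a metro sales team": (False, False),
    "Market data reporting": (False, True),
    "Chatty transactional application": (True, False),
    "Media house content editing": (True, True),
}

for name, traits in workloads.items():
    print(f"{name}: {classify(*traits)}")
```

Real assessments are rarely this binary. The lookup is only meant as a starting point for discussion with each department or entity being assessed.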

In summary, workloads with very rigid latency requirements are generally preferably set up on premises. In addition to network latency, bandwidth also plays a major role in decision-making. It is important to understand the percentage of users connecting to the server via LAN, WAN and internet. Unlike cost-based analysis, this requires understanding the business type and operating model in greater detail, along with the application design and the possibility of design changes.